Serenity and compassion in trying times

Eulogothymia: optimal balance of cognitive regulation of emotion, not too tight, not too loose

This is a quick dump to get this out there; further discussion and documentation will follow over time.

Google Trends doesn’t have a real public API; it’s heavily rate limited and so it’s hard to get even moderate amounts of data, and large amounts are right out. I worked with pytrends and scripted some delays and automation for downloading larger amounts of trend data for lists of search terms.
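Below is a minimal sketch of the kind of throttled batch download described above. It does not depend on pytrends directly. `download_in_batches` and its `fetch` callback are hypothetical names. `fetch` stands in for whatever actually queries Google Trends; with pytrends it could call `TrendReq.build_payload(batch, timeframe=...)` and then `interest_over_time()`. The five-term batch size reflects the Google Trends limit on terms compared in one query. The 60-second delay and exponential back-off are assumptions, not documented rate limits.

```python
import time
from typing import Callable, Dict, List, Sequence

# A fetch callback takes up to `batch_size` terms and returns {term: daily values}.
FetchFn = Callable[[List[str]], Dict[str, List[float]]]


def download_in_batches(
    terms: Sequence[str],
    fetch: FetchFn,
    batch_size: int = 5,      # Google Trends compares at most 5 terms per query
    delay_s: float = 60.0,    # assumed pause between requests; tune as needed
    max_retries: int = 3,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, List[float]]:
    """Fetch trend series for many terms in small, throttled batches."""
    results: Dict[str, List[float]] = {}
    for start in range(0, len(terms), batch_size):
        batch = list(terms[start:start + batch_size])
        for attempt in range(max_retries):
            try:
                results.update(fetch(batch))
                break
            except Exception:
                if attempt == max_retries - 1:
                    raise  # give up on this batch after the last retry
                # Exponential back-off after a failure (e.g. a rate-limit error).
                sleep(delay_s * 2 ** (attempt + 1))
        sleep(delay_s)  # fixed pause before the next batch
    return results
```

Keep in mind that Google Trends normalizes each query to a 0 to 100 scale. Values from different batches are therefore not directly comparable unless you rescale them, for example against a term shared by every batch.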

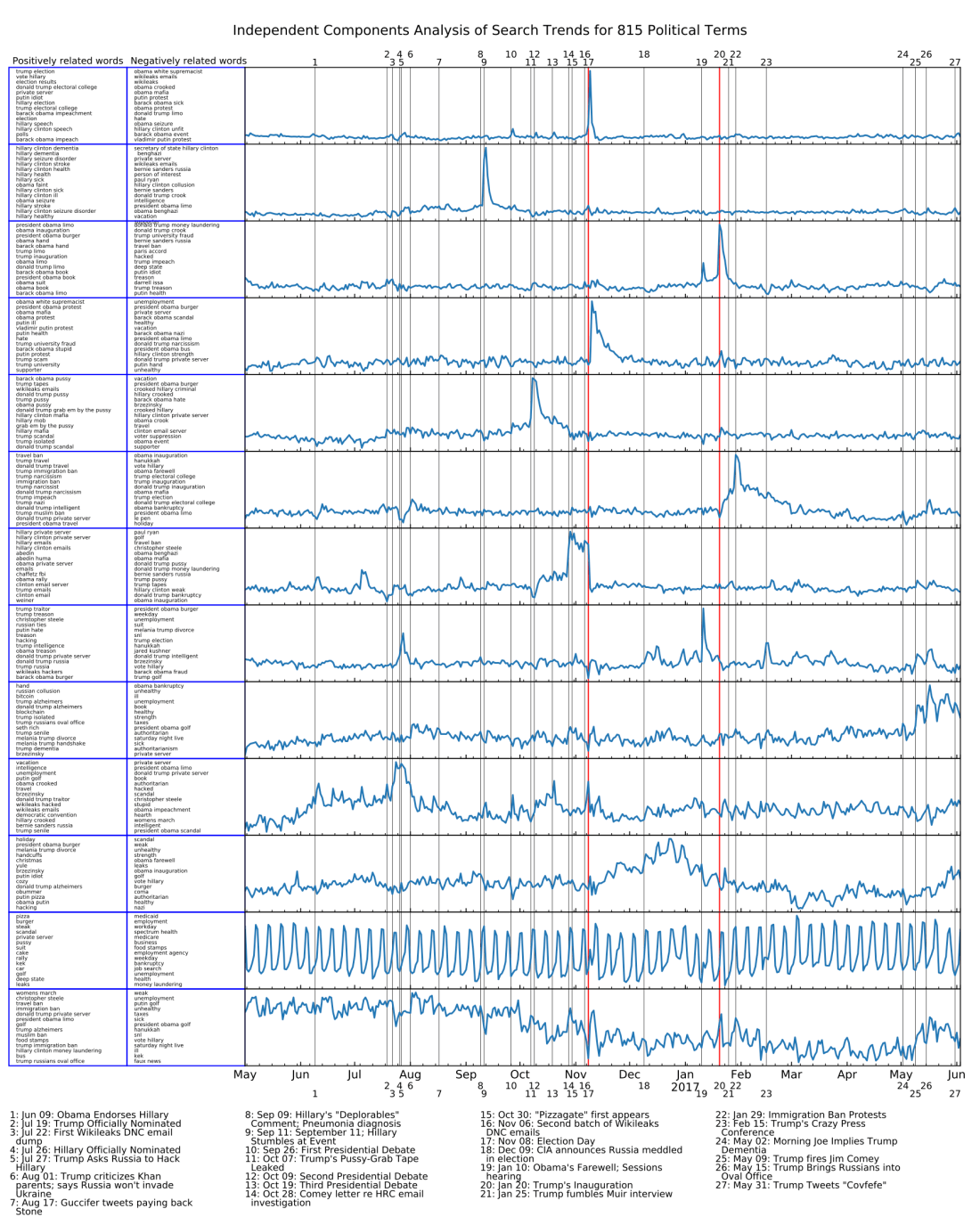

I used this to download just over a year’s worth of day-by-day trend data for (currently) 815 search terms, mostly related to current political events. Then I subjected this matrix to Independent Component Analysis (ICA) using scikit-learn, which turns out to do a great job of separating out components with clear meaning on the events timeline. The components are displayed on a stacked plot with key political events labeled on the timeline. I entered some of the political events a priori because they were obvious (like the election itself). I entered others after researching archived news corresponding to peaks I saw in the plots. Note that many of the events in the latter category were things I wasn’t thinking about at all when I put together the list of search terms, but ICA on the large set of terms brings out those peaks automatically.
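Here is a minimal sketch of that decomposition step with scikit-learn's `FastICA`. It assumes the data sit in a NumPy array `X` with one row per day and one column per search term. The per-term standardization and the `ica_components` helper name are assumptions, not a description of the original code. With this orientation, `fit_transform` returns one time course per component, which is what the stacked plot shows. `mixing_` holds each term's weight on each component.

```python
import numpy as np
from sklearn.decomposition import FastICA


def ica_components(X, n_components=13, random_state=0):
    """Unmix a (n_days, n_terms) trend matrix into independent time courses.

    Returns (sources, loadings):
      sources:  (n_days, n_components), one time course per component
      loadings: (n_terms, n_components), each term's weight on each component
    """
    X = np.asarray(X, dtype=float)
    # Standardize each term (column) so high-volume terms don't dominate.
    # This preprocessing choice is an assumption, not the original code's.
    std = X.std(axis=0)
    std[std == 0] = 1.0  # guard against constant columns
    Xs = (X - X.mean(axis=0)) / std
    ica = FastICA(n_components=n_components, whiten="unit-variance",
                  random_state=random_state, max_iter=1000)
    sources = ica.fit_transform(Xs)
    loadings = ica.mixing_
    return sources, loadings
```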

Here’s an example plot with 13 components (choosing the number of components for ICA is more art than science; 13 seemed to work well, but other numbers did too):

There are a few other interesting trends graphs I’ve seen online. Here I’ve overlaid two big ones on that plot to compare timelines. The first is the plot of Alfa Bank DNS server logs, which may or may not have something to do with anything nefarious. The second is from Echelon Insights’ annual promotional “year in news” article. Of course I don’t know their exact methodology (the point is that they’re selling their service, after all), but the trends they display likely start from the keywords labeling the peaks, not from a component analysis of some sort. I would love to hear from someone who knows more.

The columns at the left are the top 15 terms and bottom 15 terms associated with each component. The positively associated search terms trend much more frequently when you see a positive spike in the associated component. The negatively associated search terms trend much more frequently when you see a negative spike in the component, or when the overall value of the component is low. The code automatically detects the spikes and rectifies the signal (ICA outputs have arbitrary sign and scale, so some ordering and rectifying are helpful). As a result, the positively associated terms are usually more meaningful, but there are some interesting trends in the negative words too.
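Here is a sketch of the rectifying and term-ranking step. It assumes `sources` (days × components) and `loadings` (terms × components) as returned by FastICA. ICA can't determine a component's sign, so you can flip a time course and its loadings together without changing the reconstruction. This sketch flips each component so its largest excursion is positive. Ordering components by the day of their peak is a hypothetical choice. `rectify_and_order` and `top_and_bottom_terms` are illustrative names.

```python
import numpy as np


def rectify_and_order(sources, loadings):
    """Flip each component so its largest excursion is positive, then sort by peak day."""
    sources = np.array(sources, dtype=float)   # np.array copies by default
    loadings = np.array(loadings, dtype=float)
    for k in range(sources.shape[1]):
        peak_day = np.argmax(np.abs(sources[:, k]))
        if sources[peak_day, k] < 0:
            # X ~ sources @ loadings.T, so flipping both columns leaves X unchanged.
            sources[:, k] *= -1
            loadings[:, k] *= -1
    # Hypothetical ordering: components whose (positive) peak comes earliest go first.
    order = np.argsort(np.argmax(sources, axis=0), kind="stable")
    return sources[:, order], loadings[:, order]


def top_and_bottom_terms(loadings, terms, component, n=15):
    """Return the n most positively and n most negatively weighted terms."""
    idx = np.argsort(loadings[:, component])     # ascending weight
    top = [terms[i] for i in idx[::-1][:n]]      # largest weights first
    bottom = [terms[i] for i in idx[:n]]         # most negative weights first
    return top, bottom
```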

To improve visualization and insight related to the search terms, the code also generates word clouds of the top positively and negatively associated terms. Here they are in the same order as on the left side of the plot.

Although this is preliminary, I can make a few observations here.

Please consider this a preliminary post; I wanted to get these tools and data out there. You can access all the code and data at my GitHub profile here. (As of this writing, 2017 June 6, I haven’t added documentation yet, and I probably won’t have time to for a few weeks. Sorry!)

Here are PDF versions of the big images above:

icacomponents13, icacomponents13_annotated, icacomponents15

To do:

If you’ve been even remotely paying attention to America recently, you’ve heard the word “emolument” quite a few times, maybe for the first time. And if you’re really paying attention, you might have noticed that it sounds a lot like “emollient”… like moisturizing lotion.

So, you’ve heard the word “emoluments”, and hopefully it’s clear from the context that “emolument” means something like bribes and kickbacks: some kind of illicit payment for favorable treatment. Merriam-Webster says “the returns arising from office or employment usually in the form of compensation or perquisites”; Dictionary.com says “profit, salary, or fees from office or employment; compensation for services: Tips are an emolument in addition to wages.” That doesn’t sound like a word that means something unethical. But! We’re hearing the word in the context of “the Emoluments Clause of the US Constitution”, Article I, Section 9, Clause 8, which reads:

No Title of Nobility shall be granted by the United States: And no Person holding any Office of Profit or Trust under them, shall, without the Consent of the Congress, accept of any present, Emolument, Office, or Title, of any kind whatever, from any King, Prince, or foreign State. –ARTICLE I, SECTION 9, CLAUSE 8

OK, so this is where the “bribery” connotation comes in. In brief, the Emoluments Clause says that no “Person holding any Office” in the US Government shall, without the consent of Congress, accept any foreign profit, salary, fees, compensation, etc. It’s obvious why: the Founders didn’t want the sovereignty of the new nation compromised by having leaders influenced by payments from other nations or rulers. If an official considered a foreign power a threat, a payment might appease that concern. It might then lead to more favorable treatment than was really best for the nation.

Back to etymology. Our hypothetical official got bribed, and his concerns were mollified. Mollified means “softened in temper, appeased, pacified”. The word is derived from the Latin roots “mollis”, meaning “soft”, and “facere”, to make (via Late Latin “mollificare”, to make soft). So to mollify someone is, etymologically, to make them soft. And “emollient” also comes from the Latin root “mollis”, and still explicitly refers to “making soft”, because that’s what moisturizing lotion is supposed to do.

I was hoping that I’d look up the etymology of “emolument” and find the obvious connection I’ve implied above. But no, the Online Etymology Dictionary says:

mid-15c., from Old French émolument “advantage, gain, benefit; income, revenue” (13c.) and directly from Latin emolumentum “profit, gain, advantage, benefit,” perhaps originally “payment to a miller for grinding corn,” from emolere “grind out,” from assimilated form of ex “out” (see ex-) + molere “to grind” (see mallet).

Drat! That’s not what I had hoped for. “Emolument” is cognate with “mill”, a grinder, because it was perhaps originally a Latin word for paying millers for grinding corn. (“Corn” here has its older sense of any grain, because modern American “corn” is native to the New World, and the Romans didn’t have it.)

But hold on a minute, we’re in luck. After some more research, I came across the longer and more detailed etymological exploration of the word “mild”:

Old English milde “gentle, merciful,” from Proto-Germanic *milthjaz- (source also of Old Norse mildr, Old Saxon mildi, Old Frisian milde, Middle Dutch milde, Dutch mild, Old High German milti, German milde “mild,” Gothic mildiþa “kindness”).

This is from PIE *meldh-, from the root *mel- (1) “soft,” with derivatives referring to soft or softened materials (source also of Hittite mallanzi “they grind;” Armenian malem “I crush, bruise;” Sanskrit mrdh “to neglect,” also “to be moist;” Greek malakos “soft,” malthon “weakling,” mylos “millstone,” myle “mill;” Latin molere “to grind,” mola “millstone, mill,” milium “millet;” Old Irish meldach “tender;” Old English melu “meal, flour;” Albanian miel “meal, flour;” Old Church Slavonic meljo, Lithuanian malu “to grind;” Old Church Slavonic mlatu, Russian molotu “hammer”).

Bingo! The Latin molere, “to grind”, is derived from the Proto-Indo-European root mel-, “soft”, by way of the sense that grinding in a mill starts with hard grains and results in soft flour. So as I suspected, “emolument” is cognate with “emollient” (and “mollify”), through a roundabout etymological path of linguistic evolution.

It was especially amusing to learn that in addition to this, “mill”, “mild”, and “mallet” in English, as well as several other languages’ words for “hammer”, “crush”, “bruise”, “soft”, “weakling”, and “tender”, are all also cognate with our host today.

I’ll conclude this essay by quoting the ultra-conservative, Reagan-worshiping Heritage Foundation’s essay on the Emoluments Clause of the Constitution:

…Similarly, the Framers intended the Emoluments Clause to protect the republican character of American political institutions. “One of the weak sides of republics, among their numerous advantages, is that they afford too easy an inlet to foreign corruption.” The Federalist No. 22 (Alexander Hamilton). The delegates at the Constitutional Convention specifically designed the clause as an antidote to potentially corrupting foreign practices of a kind that the Framers had observed during the period of the Confederation… Wary, however, of the possibility that such gestures might unduly influence American officials in their dealings with foreign states, the Framers institutionalized the practice of requiring the consent of Congress before one could accept “any present, Emolument, Office, or Title, of any kind whatever, from…[a] foreign State.”

St. George Tucker’s explanation of the clause noted that “in the reign of Charles the [S]econd of England, that prince, and almost all his officers of state were either actual pensioners of the court of France, or supposed to be under its influence, directly, or indirectly, from that cause. The reign of that monarch has been, accordingly, proverbially disgraceful to his memory.” As these remarks imply, the clause was directed not merely at American diplomats serving abroad, but more generally at officials throughout the federal government.

Have you ever watched rain running down a window on a rainy day? Droplets merge into rivulets that twist and stream around. Sometimes two rivulets come together, and their flows merge into a larger rivulet that runs to the bottom of the window.

Have you ever seen a place where two rivers run together? Water flows off the land when it rains and into channels and then together and together and together and then eventually into the oceans. And at some places, a couple of those channels are both big enough that we call it two rivers running together.

For almost every single river on the planet, humans have traced each river up from the ocean to each of those merging places. At each Y-shape they decided one fork is the “real” river, which gets to keep the name. The other one is a separate river, which gets called a “tributary” and never gets to see the ocean.

This is reality: Water flows over the earth and through a vast network of merging channels into the oceans.

But humans are so obsessed with forcing nature into categories that they have gone over the whole planet and at every merging of two rivers they decided which one is to blame for the output river, and which one just ends there.

OK, I’ll grant this much: practically speaking, you have to call each river something, and this system is probably the best we could do for naming them. But there’s a real loss if you forget that it’s all just water. The rivers are what they are; but if you forget that they all flow together, you’ve failed to understand nature very well.

You’re probably reading this as a spiritual metaphor or a philosophical musing, or some such thing. But I have a Ph.D. in psychology, not either of those. I’m writing this to point out that the way ordinary human minds work, we have a strong bias towards forgetting that everything flows together. When something happens, we want to know who is to blame and who was an innocent victim. We want to know who wins and who loses. We want to know what’s right and what’s wrong, who is good and who is evil. We want to know these things partly because we want to be saved from the anxiety that comes from not having neat clean answers; the anxiety that comes from not being able to classify, name, and control everything that happens to us.

Some people more so than others, so maybe you’re reading this and it doesn’t resonate with you… in which case, consider yourself lucky!

Remember that just as the names of the rivers are made up, so are our names for causal relationships. Causal relationships are much less limited than our names for them. When we blame someone else for something that happens, we give ourselves the name of “tributary” and say that our role ended there. And when we blame ourselves, we cut off the others too. Neither of those is completely true. Yes, it’s practically useful to say that it goes one way or the other in the cases where it’s clear-cut, but you’re always losing information when you do that.

This paper got some attention and amusement when it came out a few years ago:

Constraints on the Universe as a Numerical Simulation

Observable consequences of the hypothesis that the observed universe is a numerical simulation performed on a cubic space-time lattice or grid are explored. The simulation scenario is first motivated by extrapolating current trends in computational resource requirements for lattice QCD into the future. Using the historical development of lattice gauge theory technology as a guide, we assume that our universe is an early numerical simulation with unimproved Wilson fermion discretization and investigate potentially-observable consequences. Among the observables that are considered are the muon g − 2 and the current differences between determinations of α, but the most stringent bound on the inverse lattice spacing of the universe, $b^{-1} \gtrsim 10^{11}$ GeV, is derived from the high-energy cut off of the cosmic ray spectrum. The numerical simulation scenario could reveal itself in the distributions of the highest energy cosmic rays exhibiting a degree of rotational symmetry breaking that reflects the structure of the underlying lattice.

A friend brought up this question in a Facebook thread recently. Here’s my response.

OK, here’s the thing. Suppose the function of the simulation is to simulate physics, for whatever reason, and our awareness is epiphenomenal to that goal. Then it would make sense to run it as a lattice simulation on a cubic grid like the one posited in that paper. On the other hand, you could ask “what would be the most efficient way to simulate what I seem to be experiencing?” The answer is undoubtedly a neural-net-based simulation that generates your own consciousness. It would feed that consciousness stimuli from an attention-driven sparse simulation of only the relevant parts of the rest of the universe. In that kind of simulation, there’s no reason at all why you would see any artifacts in the outside world, because the outside world is generated only to an awareness-centric spec.

It’s also possible that it could be a hybrid, with a global universe buffer simulation used to provide an optimized sparse consistency across different minds’ awarenesses.

In any case, you run into the same problem philosophy has run into for all of time, which is that YOU don’t actually have any proof that the rest of us aren’t NPCs. The simulation has to generate a lot of information for you to feel like you are you, but it only has to generate an insignificantly small fraction of that amount of information for you to perceive me, so it wouldn’t really seem to be efficient to simulate me at the same level of richness as it’s simulating you, merely for the purpose of filtering out 99.9999999% of all that and feeding you the tiny remainder.

The standard response to that is “yeah, but it’s unlikely that you’re so special, so don’t fall into the trap of solipsism”. But that doesn’t actually make sense. You ARE absolutely that special, precisely because you have overwhelming, direct evidence for the presence of your own mental processes, and barely any evidence at all for the presence of anyone else’s mental processes. The part of the simulation that is YOU is special because it’s far and away, no contest whatsoever, the densest part of the simulation. You can argue with me all you want, but it’s prima facie evident that you’re experiencing you more than you’re experiencing me, so any objection you might make is pretty hollow.

Of course I’m writing this based on what I’m experiencing, but if you’re not an NPC and you’re reading this, then it applies equally well to you. And there’s no reason you should believe there’s a fully simulated consciousness here (me) if the only thing you’re experiencing is just yet another wanky out-there philosophical internet blog blather. So I’m not going to flatter my(potentially-NPC)self by claiming to be real.

Enjoy the ride

People take comfort in a wide range of philosophical, theological, and ontological beliefs, such as life after death or a loving and supportive deity, but scientific psychology is skeptical of them. This therapeutic tension can be resolved without settling the underlying philosophical questions, through ontological depressive realism. CBT theory has recognized that unrealistic optimism can be part of optimal mental health, and the same principle can apply to ontological questions such as life after death. This will allow the scientific and clinical community to work with the full range of possible beliefs and (purported) experiences without needing to accept, or even address, whether they are based on any sort of objective truth.

tl;dr: The only thing you need to do to make a convincing AI chat bot is not to fuck it up.

The goal of sentient AI research has been, in a sense, nearly universally misconstrued: the goal is to make something that we experience as sentient, not to make something that is sentient in an absolute sense. This is really the only meaningful goal, because no one has direct access to anyone else’s sentience; the existence of any sentience or intelligence other than your own is only something you infer, not perceive.* Note that the only widely used definitional test for machine intelligence is the Turing Test. It tests precisely the criterion I’m stating here: whether other humans perceive the test subject as intelligent, not whether the test subject is objectively intelligent.

Why is this? Well, to start at the beginning:

Our brains are designed to take incoming sensory information and find patterns in it and organize it into percepts. Additionally, we have special circuits dedicated to finding percepts of conspecifics (i.e. other creatures like ourselves) and other non-conspecific creatures, because that was especially survival-relevant during our evolution.

Two other processes work together here. Matching a pattern is intrinsically rewarding (that’s why we like puzzles, for example). And perceptual circuits are tuned to be overly “grabby”, because it’s better to mistakenly think we saw a hungry predator and then realize we were wrong than to mistakenly think we didn’t see a hungry predator and then realize we were wrong.

Because of those two things, we naturally anthropomorphize. Anthropomorphization is traditionally presented as some extra weird hyperactive imagination that primitive peoples had. It actually represents the normal functioning of our brains in the context of the information available in a given circumstance. One good example is the Heider-Simmel Illusion, widely studied in psychology and neuroscience. Another is ELIZA, a shockingly simple chat bot created in the mid-1960s based on Rogerian psychotherapy principles. Despite its simplicity, it is said to have convinced some people to share some of their innermost thoughts and feelings.
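Here is a toy responder in the spirit of ELIZA, to give a sense of how little machinery that can take. It matches a keyword pattern, swaps first- and second-person words, and echoes the user's phrase back as a question. The rules below are made up for illustration. They are not Weizenbaum's actual DOCTOR script, which used a larger set of ranked keywords and decomposition rules.

```python
import re

# Hypothetical, heavily simplified rules: each pattern maps to a response template.
RULES = [
    (re.compile(r"i am (.*)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"i feel (.*)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please, go on."

# Swap first and second person so the captured phrase can be echoed back.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}


def reflect(phrase: str) -> str:
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in phrase.split())


def respond(utterance: str) -> str:
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(text)  # rules are tried in order; first match wins
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK  # no keyword found: a content-free prompt keeps the user talking
```

For example, `respond("I am sad about my job.")` returns "Why do you say you are sad about your job?"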

So, if you present someone with a system that can be perceived as an intelligence, it will be… unless some other factor intervenes to prevent that from happening. (If the patterns almost line up with a percept of a human but are slightly off, one experiences what’s known as the “uncanny valley”.)

An important consequence of this is that the lower the bandwidth, the easier it is to be convincingly intelligent. ELIZA was convincingly intelligent when the sensory and conceptual bandwidth was limited to the territory of only a Rogerian psychotherapist communicating through a teletypewriter. A savvy modern person, expecting a cheesy chat bot, will have that expectation almost instantly confirmed, the illusion broken or never formed. A person in the 1960s encountering ELIZA unexpectedly had no context for what a responsive terminal might represent other than another person. Such a person fell into that percept and in some cases stayed there quite a while. Indeed, Eugene Goostman was widely but naïvely reported to have “passed the Turing Test” in 2014. The strategy behind that bot was simply to reduce the bandwidth of available interactions by presenting the character as a 13-year-old Ukrainian boy with little cultural knowledge in common with the judges.

Thus, one major obstacle to a successful AI chat bot is that if the product is released and people expect a fully general intelligence, the conceptual bandwidth of the experience is too great and people will quickly find the holes.

Another obstacle stems from the “unless some other factor intervenes” caveat. As I said above, someone in the 1960s stumbling across ELIZA with no context to perceive an interactive conversation as originating with anything other than a human, will have that perception reinforced and will stick with it (for a little while, at least). But a person approaching ELIZA with the attitude “I know this is not intelligent, I’m not going to be impressed until it convinces me” is not going to be impressed. The key here, though, is that that’s not a problem with AI; that’s just how our perception of intelligence or sentience works. In Capgras Syndrome, a patient physically recognizes the appearance, voice, and other physical characteristics of a familiar person, but becomes unable to recognize their personality or sense of personhood and so believes they are an impostor or “body snatcher”. They will be quite explicit in admitting how convincing the substitution is, even admitting that specific speech patterns, facial expressions, personality quirks, etc. are consistent, but nevertheless the percept of “this is this person” never forms. (Well, never is an overstatement; people often recover from this delusion eventually.)

Now imagine that, for whatever reason, you believed that you were about to receive a text message from a chat bot and engage in a conversation, when in fact there was a live person at the other end of the line. How many text messages would it take for you to be guaranteed, 100% convinced that it was a person and not a bot? If you started out believing it was a bot, and you’re familiar with the idea of text bots, then I guarantee you that even if you came around quickly to the opinion that boy, this is a pretty good bot, I didn’t know they had become so advanced, it would still take a long time for you to fully change your mind and believe it was a human—or you might never! (You can try out a variation of this theme here.)

The point of these thought experiments is that the perception of an Other as sentient is, to a large extent, determined by one’s expectations. Although the human mind is promiscuous in recognizing sentience everywhere, overcoming an initial set of expectations about a percept of sentience (or non-sentience) is very difficult. And this poses another obstacle to the development of a convincing AI chat bot.

In conclusion, the task of AI can be reframed as the task of making a system that is perceived as being intelligent rather than the task of making a system that is intelligent in some objective sense, and this framing of the task is more consistent with the criterion that is already being used for success, as well as being more consistent with how the brain actually works. Framed thus, the task can theoretically be accomplished quite simply (not necessarily easily, but simply) if one is able to sufficiently narrow the scope of the interactions. However, it is a very difficult task to overcome a prior belief that the bot is unconvincing; and behavior flaws which clearly do not fit into the parameters of the percept also can quickly damage the illusion.

Just compiled this for a friend; putting it here for the world. She was trying to understand a paper so she could do a similar analysis, and she was getting confused by what I was saying. It turned out that the paper used the wrong test: they used Kruskal-Wallis when they should have used Friedman, because they had repeated measurements in the same monkeys. It’s kind of a mess.
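Here is a small illustration of why the choice matters. It runs SciPy's `friedmanchisquare` and `kruskal` on made-up data from six hypothetical monkeys. Their baseline responses differ a lot, and each monkey is measured under three conditions. Every monkey's response rises by one unit per condition. Friedman ranks the conditions within each monkey, so it sees this consistent effect. Kruskal-Wallis treats the 18 values as independent samples, so the differences between monkeys swamp the effect.

```python
import numpy as np
from scipy import stats

# Hypothetical data: rows = 6 monkeys, columns = 3 conditions.
# Baselines differ a lot between monkeys, but within every monkey
# the response rises by exactly 1 unit per condition.
baseline = np.array([10, 20, 30, 40, 50, 60], dtype=float)
data = baseline[:, None] + np.array([0.0, 1.0, 2.0])

# Friedman: ranks conditions *within* each monkey (repeated measures).
friedman = stats.friedmanchisquare(data[:, 0], data[:, 1], data[:, 2])

# Kruskal-Wallis: pools all 18 values as if from 18 independent animals.
kruskal = stats.kruskal(data[:, 0], data[:, 1], data[:, 2])

print(f"Friedman:       chi2 = {friedman.statistic:.2f}, p = {friedman.pvalue:.4f}")
print(f"Kruskal-Wallis: H    = {kruskal.statistic:.2f}, p = {kruskal.pvalue:.4f}")
```

On these numbers Friedman gives χ² = 12 (p ≈ 0.0025), while Kruskal-Wallis gives H ≈ 0.42 (p ≈ 0.81).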

Basic single-factor statistical tests, “ordinary” parametric statistics:

| Parametric Tests | Independent Samples | Non-independent Samples (Repeated Measures) |
|---|---|---|
| More than two samples | One-way ANOVA | One-way repeated-measures ANOVA |
| Two samples | T-test | Paired T-test |

Basic single-factor statistical tests, non-parametric statistics:

| Non-Parametric Tests | Independent Samples | Non-independent Samples (Repeated Measures) |
|---|---|---|
| More than two samples | Kruskal-Wallis test | Friedman test |
| Two samples | Mann-Whitney U test | Wilcoxon signed-rank test |
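The two tables can be sketched as a lookup into `scipy.stats`. `choose_test` is a hypothetical helper that covers single-factor designs only. SciPy has no one-way repeated-measures ANOVA, so that cell points to `statsmodels`' `AnovaRM` instead.

```python
from scipy import stats


def choose_test(parametric: bool, n_groups: int, repeated_measures: bool):
    """Return the scipy.stats function for a single-factor comparison.

    n_groups counts the groups (independent samples) or conditions
    (repeated measures) being compared.
    """
    if n_groups < 2:
        raise ValueError("need at least two groups or conditions")
    two = n_groups == 2
    if parametric:
        if two:
            return stats.ttest_rel if repeated_measures else stats.ttest_ind
        if repeated_measures:
            # scipy.stats has no one-way repeated-measures ANOVA;
            # statsmodels.stats.anova.AnovaRM is one option.
            raise NotImplementedError("use statsmodels.stats.anova.AnovaRM")
        return stats.f_oneway
    if two:
        return stats.wilcoxon if repeated_measures else stats.mannwhitneyu
    return stats.friedmanchisquare if repeated_measures else stats.kruskal
```

For the monkey paper's design (non-parametric, three conditions, repeated measures), `choose_test(False, 3, True)` returns `stats.friedmanchisquare`.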

I took a class on Cognitive Work Analysis (CWA) from Dr. John D. Lee here at the UW, and I found it very interesting. A friend was asking me for help writing cover letters, specifically how to edit one down to be short enough. I don’t have a lot of experience writing cover letters per se, but I wrote this procedure based on CWA. I’d be interested in feedback, of course.